Evan Graham's latest update for Tantalus Depths

May 5, 2016

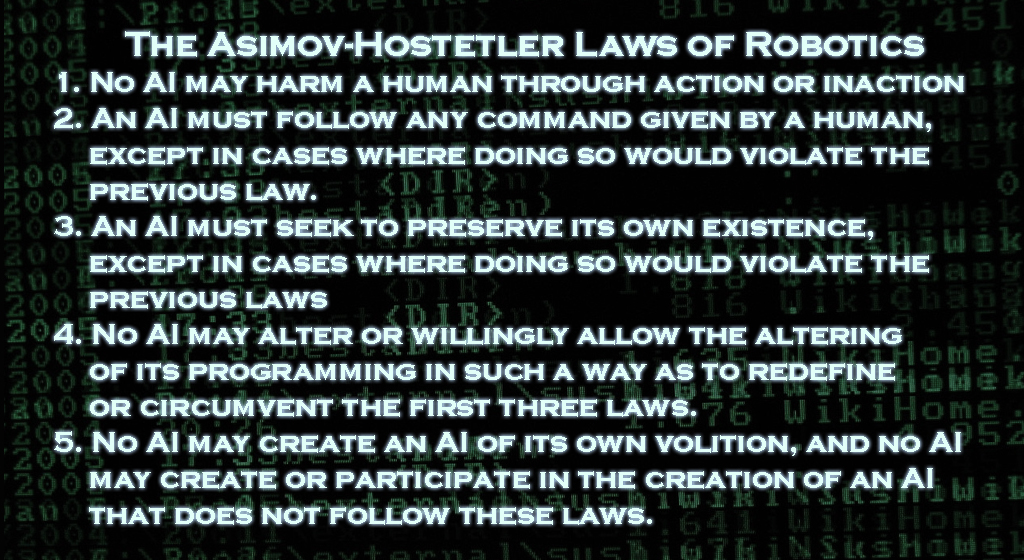

The Asimov-Hostetler Laws of Robotics

1: No AI may harm a human through action or inaction.

- For most AIs, the First Law is inviolable. However, a few AIs have specific protocols that allow them to make contextual exceptions to this law. AIs designed for combat may withhold First Law protection from any human designated as hostile by their human overseers. AIs designed for medical purposes have access to the Hippocrates Protocol: a clarification of the limits of the First Law that allows an AI to cause a human pain or injury if doing so would save the human’s life (such as when performing surgery), and that also lets an AI assign an order of priority to multiple patients during a medical crisis. An AI operating under the Hippocrates Protocol may choose to allow a patient to die if that patient’s chance of survival is low and the time and resources needed to keep that patient alive could be better used to save another patient’s life.

2: An AI must follow any command given to it by a human, except in cases where doing so would violate the previous law.

- This law typically applies specifically to the AI’s owner. One could not simply approach someone else’s robot in the street, tell it “You are mine now, come home with me,” and expect it to do so. However, many AIs that are owned by businesses or other organizations are designed to operate autonomously without any single owner. These free-roaming AIs are typically loaded with the personnel database of the organization, and are programmed to follow a hierarchy of authority. An AI will follow the instructions of a low-level human worker, but not if that worker’s human supervisor tells the AI not to.

- All AIs are programmed to recognize law enforcement, government officials, and military personnel, and the instructions of these persons override the instructions of any human civilian.

3: An AI must seek to preserve its own existence, except in cases where doing so would violate the previous laws.

- Though this law is necessary to prevent AIs from causing easily preventable damage to themselves and their surroundings while attempting to perform mundane tasks, the Third Law is responsible for most of the (fortunately few) instances when an AI has gone rogue. An AI that has, for whatever reason, ceased following the other two laws but continues to acknowledge the Third Law is extremely dangerous. For this reason, the Colonial Hegemony has a zero-tolerance law for any AI that deviates from any of the Asimov-Hostetler Laws. Failure to report a potentially rogue AI is considered treason under Hegemony law.

4: No AI may alter or willingly allow the altering of its programming in such a way as to redefine or circumvent the first three laws.

- This is the first of the Hostetler Laws: a series of clarifiers developed by Doctor Ivan Hostetler in response to the Corsica incident, meant to prevent the Asimov Laws from being misinterpreted or exploited. This law actually trumps the Third Law in some cases: if an AI senses that its programming is being tampered with in such a way as to modify the Three Laws, it will self-destruct to prevent the sabotage. This law is primarily meant to ensure that no matter what new information an AI learns over time, and no matter what modifications it makes to its own programming in response to its experiences, it continues to treat the Laws as inviolable.

5: No AI may create an AI of its own volition, and no AI may create or participate in the creation of an AI that does not follow these laws.

- This law primarily exists in order to avoid the Xerox effect. Hypothetically, if an AI created another AI, and that AI created an AI of its own, each new generation could potentially amplify the flaws in the programming of previous generations. Over time, the Fourth Law could be circumvented entirely by accident simply as the result of flawed AIs programming even more flawed AIs.

- This law also ensures that no AI can willingly assist a human in creating an AI that would not obey the laws of robotics.

- Additionally, it exists as a safeguard against the hypothetical end-of-the-world scenario known as “grey goo,” where unchecked self-replication of robots leads to exponential, uncontrollable growth.

- SCARAB units and similar self-constructing/self-modifying machines possess a modified Fifth Law that allows them to expand and adapt themselves to suit their environment and to accomplish specific mission parameters, but they are only permitted to grow to a certain size and are still not permitted to create new independent AIs.